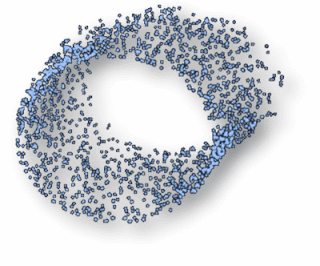

Flexible particle system - OpenGL Renderer

As I wrote in the Introduction to the particle series, I’ve got only a simple particle renderer. It uses position and color data with one attached texture. In this article you will find a description of the renderer and the problems with the current implementation.

The Series

- Initial Particle Demo

- Introduction

- Particle Container 1 - problems

- Particle Container 2 - implementation

- Generators & Emitters

- Updaters

- Renderer (this post)

- Introduction to Optimization

- Tools Optimizations

- Code Optimizations

- Renderer Optimizations

- Summary

Introduction

The gist is located here: fenbf / ParticleRenderer

The renderer’s role is, of course, to create pixels from our data. I tried to separate rendering from animation, and thus I have the IParticleRenderer interface. It takes data from ParticleSystem and uses it on the GPU side. Currently I have only GLParticleRenderer.

A renderer does not need all of the particle system data. This implementation uses only color and position.

The “renderer - animation” separation gives a lot of flexibility. For instance, for performance tests, I’ve created an EmptyRenderer and used the whole system as it is, without changing even one line of code! Of course I got no pixels on the screen, but I was able to collect elapsed time data. The same idea can be applied to unit testing.
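Here is a minimal sketch of that idea, assuming the IParticleRenderer interface described in the next section. The ParticleSystem below is a hypothetical stand-in (the real one lives in the gist), and the call counters are added purely for illustration; they are not part of the original EmptyRenderer.

```cpp
#include <cstddef>

// Hypothetical stand-in for the real ParticleSystem from the gist.
class ParticleSystem
{
public:
    explicit ParticleSystem(std::size_t alive) : m_alive(alive) { }
    std::size_t numAliveParticles() const { return m_alive; }
private:
    std::size_t m_alive;
};

class IParticleRenderer
{
public:
    IParticleRenderer() { }
    virtual ~IParticleRenderer() { }

    virtual void generate(ParticleSystem *sys, bool useQuads) = 0;
    virtual void destroy() = 0;
    virtual void update() = 0;
    virtual void render() = 0;
};

// Renderer that touches no GPU state. It only remembers the system
// and counts calls (the counters are an assumption, handy for tests).
class EmptyRenderer : public IParticleRenderer
{
public:
    void generate(ParticleSystem *sys, bool) override { m_system = sys; }
    void destroy() override { m_system = nullptr; }
    void update() override { ++m_updates; }
    void render() override { ++m_renders; }

    int updates() const { return m_updates; }
    int renders() const { return m_renders; }
    bool attached() const { return m_system != nullptr; }

private:
    ParticleSystem *m_system = nullptr;
    int m_updates = 0;
    int m_renders = 0;
};
```

Because the rest of the code only sees an IParticleRenderer pointer, swapping this class in requires no changes to the simulation loop, and a unit test can check that update() and render() were called the expected number of times.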

The Renderer Interface

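    class IParticleRenderer
    {
    public:
        IParticleRenderer() { }
        virtual ~IParticleRenderer() { }

        // attach to a particle system and create renderer resources
        virtual void generate(ParticleSystem *sys, bool useQuads) = 0;
        // release renderer resources
        virtual void destroy() = 0;
        // copy the current particle data into the renderer's buffers
        virtual void update() = 0;
        // draw the particles
        virtual void render() = 0;
    };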

useQuads is currently not used. If it is set to true, the renderer should generate quads instead of points. This would increase the amount of memory sent to the GPU.
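Here is a rough estimate of that cost, assuming each vertex carries a 4-float position and a 4-float color as in this renderer. The two quad layouts (four indexed vertices, or six vertices as two unindexed triangles) are assumptions for illustration, since quads are not implemented yet.

```cpp
#include <cstddef>

// Per-vertex payload used by this renderer: vec4 position + vec4 color.
constexpr std::size_t kBytesPerVertex = 2 * 4 * sizeof(float);

// Hypothetical helper: bytes uploaded per frame for `particles` particles
// when each particle expands to `verticesPerParticle` vertices.
constexpr std::size_t uploadBytes(std::size_t particles,
                                  std::size_t verticesPerParticle)
{
    return particles * verticesPerParticle * kBytesPerVertex;
}

constexpr std::size_t kPointVerts        = 1; // GL_POINTS
constexpr std::size_t kIndexedQuadVerts  = 4; // quad drawn with an index buffer
constexpr std::size_t kTriangleQuadVerts = 6; // two unindexed triangles
```

With 100,000 particles and 4-byte floats, points need about 3.2 MB per frame, indexed quads about 12.8 MB, and unindexed quads about 19.2 MB.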

How to render particles using OpenGL

Shaders

    #version 330

    uniform mat4x4 matModelview;
    uniform mat4x4 matProjection;

    layout(location = 0) in vec4 vVertex;
    layout(location = 1) in vec4 vColor;

    out vec4 outColor;

    void main()
    {
        // use the vVertex attribute declared above; the built-in
        // gl_Vertex is not available in the core profile
        vec4 eyePos = matModelview * vVertex;
        gl_Position = matProjection * eyePos;

        outColor = vColor;

        // shrink points as they get farther from the camera
        float dist = length(eyePos.xyz);
        float att = inversesqrt(0.1f*dist);
        gl_PointSize = 2.0f * att;
    }

The above vertex shader uses color and position. It computes gl_Position and gl_PointSize.
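To get a feel for the numbers, the size attenuation from the shader can be mirrored on the CPU. The pointSize function below is a hypothetical helper written only for illustration; it repeats the shader's arithmetic and is not part of the renderer.

```cpp
#include <cmath>

// CPU-side mirror of the shader's point size computation (illustration only).
// dist is the distance from the camera in eye space and must be > 0.
inline float pointSize(float dist)
{
    const float att = 1.0f / std::sqrt(0.1f * dist); // inversesqrt(0.1f*dist)
    return 2.0f * att;                               // value written to gl_PointSize
}
```

A particle 10 units from the camera gets a size of 2 pixels, one at 40 units gets 1 pixel, and one at 2.5 units gets 4 pixels. The size keeps growing as the distance approaches zero, so a real effect may want to clamp it.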

The fragment shader is quite trivial, so I will not paste the code here :)

OpenGL Particle Renderer Implementation

Update()

    void GLParticleRenderer::update()
    {
        const size_t count = m_system->numAliveParticles();
        if (count > 0)
        {
            // positions: 4 floats per alive particle
            glBindBuffer(GL_ARRAY_BUFFER, m_bufPos);
            float *ptr = (float *)(m_system->finalData()->m_pos.get());
            glBufferSubData(GL_ARRAY_BUFFER, 0, count*sizeof(float)* 4, ptr);

            // colors: 4 floats per alive particle
            glBindBuffer(GL_ARRAY_BUFFER, m_bufCol);
            ptr = (float*)(m_system->finalData()->m_col.get());
            glBufferSubData(GL_ARRAY_BUFFER, 0, count*sizeof(float)* 4, ptr);

            glBindBuffer(GL_ARRAY_BUFFER, 0);
        }
    }

As you can see, update() takes the needed data and updates the renderer’s buffers.

Render()

    void GLParticleRenderer::render()
    {
        const size_t count = m_system->numAliveParticles();
        if (count > 0)
        {
            glBindVertexArray(m_vao);
            glDrawArrays(GL_POINTS, 0, count);
            glBindVertexArray(0);
        }
    }

plus the whole context:

    glEnable(GL_TEXTURE_2D); // fixed-function state; not needed when a shader samples the texture
    glBindTexture(GL_TEXTURE_2D, gParticleTexture);

    glEnable(GL_PROGRAM_POINT_SIZE); // let the vertex shader set gl_PointSize

    mProgram.use();
    mProgram.uniformMatrix4f("matProjection", camera.projectionMatrix);
    mProgram.uniformMatrix4f("matModelview", camera.modelviewMatrix);

    // additive blending
    glEnable(GL_BLEND);
    glBlendFunc(GL_SRC_ALPHA, GL_ONE);

    gpuRender.begin();
    gCurrentEffect->render(); // << our render() method
    gpuRender.end();

    glDisable(GL_BLEND);

    mProgram.disable();

The problems

The OpenGL renderer is simple and it works. Unfortunately, it is not ideal, production-ready code! Here is a list of things to improve:

- buffer updates: just the simplest method right now. It could be improved by using mapping and double buffering.

- a lot of buffer techniques can be found in this great post series at thehacksoflife: When Is Your VBO Double Buffered?

- texture ID in the renderer - as a member, not outside! Additionally, we could think about using a texture atlas and a new parameter for a particle - texID. That way each particle could use a different texture.

- only point rendering. There is the useQuads variable, but maybe it would be better to use a geometry shader to generate quads.

- quads would allow us to easily rotate particles.

- lots of great ideas about particle rendering can be found under this Stack Overflow question: Point Sprites for particle system

CPU to GPU

Actually, the main problem in the system is the CPU side and the memory transfer to the GPU. We lose time not only on the data transfer itself, but also because of synchronization. The GPU sometimes (or even often) needs to wait for previous operations to finish before it can update buffers.

Keeping the simulation on the CPU was my initial assumption and a design choice. I am aware that, even if I optimize the CPU side to the maximum level, I will not be able to beat a “GPU only” particle system. We have, I believe, lots of flexibility, but some performance is lost.
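One commonly used way to reduce the synchronization stalls mentioned above is to rotate between several buffers, so that the CPU fills one buffer while the GPU may still be reading the one from the previous frame. The sketch below shows only the bookkeeping, with plain integers standing in for OpenGL buffer names. The BufferRing class and its methods are hypothetical; a real version would create the buffers with glGenBuffers and bind the selected one before uploading and drawing.

```cpp
#include <array>
#include <cstddef>

// Hypothetical round-robin selector over N buffer handles.
template <std::size_t N>
class BufferRing
{
public:
    explicit BufferRing(const std::array<unsigned int, N> &ids) : m_ids(ids) { }

    // Buffer the CPU should fill (and draw from) this frame.
    unsigned int writeTarget() const { return m_ids[m_index]; }

    // Buffer that was the write target one frame earlier;
    // the GPU may still be reading from it.
    unsigned int previous() const { return m_ids[(m_index + N - 1) % N]; }

    // Call once per frame after issuing the draw call.
    void advance() { m_index = (m_index + 1) % N; }

private:
    std::array<unsigned int, N> m_ids;
    std::size_t m_index = 0;
};
```

With N = 2 this is classic double buffering. Whether it actually helps depends on the driver, so it is something to measure rather than assume.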

What’s Next

This post finishes the ‘implementation’ part of the series. We have the animation system and the renderer, so we can say that ‘something works’. Now we can take a look at optimizations! In the next few posts (I hope to finish before the end of the year :)), I will cover improvements that got this whole system running at something like 50% (of the initial speed). We’ll see how it ends.

Read next: Introduction to Optimization

Questions

What do you think about the design?

What methods could be used to improve the rendering part? Some advanced modern OpenGL stuff?
